First approach to Memory Allocation

05/24/2009

Linear and Physical domains

When memory addresses are expressed as Linear (0 to 2^32 - 1), we say they are in the "Linear Domain". Addresses expressed as Physical (as wired in the circuit) are said to be in the "Physical Domain".

Since Linear-to-Physical address translation is done as part of the bus cycle (using the Trans Matrix), the Physical domain is mostly restricted to that low-level context. General-purpose registers and even the PC (Program Counter) hold Linear addresses rather than Physical ones. We can say that "our CPU works in the Linear Domain".

Memory allocation, however, is done in the Physical Domain since it involves Trans Matrix population and efficient ways of using the available physical memory, as we shall see.

Memory Allocation

The unit of memory allocation is the Page Frame (4KB of contiguous bytes in physical memory). For the CPU, an alloc operation involves little more than adding entries to the Trans Matrix. Once in the Matrix, the referenced pages can be accessed through the Paging mechanism at the CPU circuit level as part of ordinary bus cycles.

The CPU must provide a supervisor instruction similar to this:

mem_alloc page, control

Where:

Page: Physical address of the Page Frame.

Control: Bitmapped control fields and PROC #.
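To make the effect of the instruction concrete, here is a minimal Python model of it. This is only a sketch: the control-word layout (PROC # in the low 8 bits, flags above it), the `make_control` helper, and the representation of the Trans Matrix as a per-process list whose index is the linear page number are all assumptions made for illustration, not part of the design described here.

```python
PAGE_SIZE = 4096  # 4KB Page Frame, as stated in the text

# Hypothetical control-word layout (an assumption, not from the design):
# low 8 bits = PROC #, remaining bits = bitmapped control flags.
PROC_BITS = 8
PROC_MASK = (1 << PROC_BITS) - 1


def make_control(proc, flags=0):
    """Pack a PROC # and flag bits into one control word."""
    if not 0 <= proc <= PROC_MASK:
        raise ValueError("PROC # out of range")
    return (flags << PROC_BITS) | proc


def mem_alloc(trans_matrix, page, control):
    """Model of 'mem_alloc page, control': add one entry to the Trans Matrix.

    trans_matrix maps PROC # -> list of (frame address, flags). The list
    index is taken to be the linear page number (an assumption).
    Returns the linear page number the frame was mapped at.
    """
    if page % PAGE_SIZE != 0:
        raise ValueError("page must be a page-aligned physical address")
    proc = control & PROC_MASK
    flags = control >> PROC_BITS
    entries = trans_matrix.setdefault(proc, [])
    entries.append((page, flags))
    return len(entries) - 1


trans_matrix = {}
mem_alloc(trans_matrix, 0x5000, make_control(3, flags=0b1))
mem_alloc(trans_matrix, 0x2000, make_control(3))
```

After these two calls, process 3 owns two consecutive linear pages backed by the frames at 0x5000 and 0x2000, in that order.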

System software is responsible for keeping track of allocation. This involves, among other things:

-- Distribution of physical memory among different processes (collisions must be avoided).

-- Finding the right physical block size for efficient use of memory.

-- De-allocation
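The bookkeeping listed above could look roughly like the following sketch. The `FrameTracker` class and its method names are hypothetical, and the policy shown (take the first physically contiguous run of free frames, otherwise fall back to scattered ones) is just one possible choice, not something the design prescribes.

```python
PAGE_SIZE = 4096  # 4KB Page Frame, as in the text


class FrameTracker:
    """Hypothetical bookkeeping kept by system software (not by the CPU)."""

    def __init__(self, total_frames):
        # owner[i] is the PROC # holding frame i, or None if the frame is free.
        self.owner = [None] * total_frames

    def _find_run(self, count):
        """Return the first run of `count` adjacent free frames, or None."""
        run = []
        for i, holder in enumerate(self.owner):
            run = run + [i] if holder is None else []
            if len(run) == count:
                return run
        return None

    def allocate(self, proc, count):
        """Assign `count` free frames to `proc`; return their physical addresses."""
        if count < 1:
            raise ValueError("count must be at least 1")
        free = [i for i, holder in enumerate(self.owner) if holder is None]
        if len(free) < count:
            raise MemoryError("not enough free page frames")
        # Prefer a physically contiguous run; otherwise accept scattered frames.
        chosen = self._find_run(count) or free[:count]
        for i in chosen:
            self.owner[i] = proc  # only free frames are chosen, so no collisions
        return [i * PAGE_SIZE for i in chosen]

    def release(self, proc):
        """De-allocate every frame held by `proc`; return how many were freed."""
        freed = 0
        for i, holder in enumerate(self.owner):
            if holder == proc:
                self.owner[i] = None
                freed += 1
        return freed
```

Each address returned by `allocate` would then be handed to the CPU with one `mem_alloc` per frame.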

Contiguousness in Linear and Physical domains

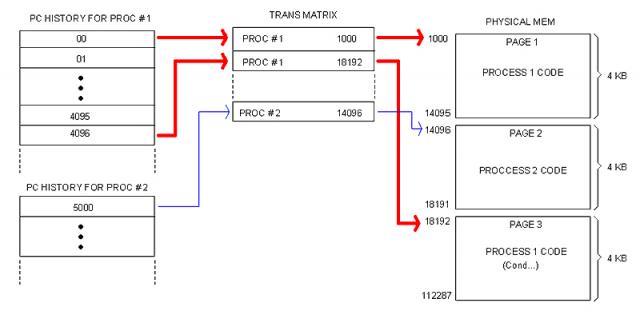

We have to distinguish between contiguous Linear addresses and contiguous Physical addresses. A code segment without branches, for instance, always occupies a contiguous block in the Linear domain but not necessarily in the Physical domain, as illustrated in the image above.

This will probably be a common scenario in real life. Notice that memory fragmentation is hidden from the program code (which lives in the Linear domain), meaning that System software dealing with these issues must work in the Physical domain instead. Hence the need for Physical-Domain instructions provided by the CPU.
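A small sketch of this situation follows. The frame addresses are made up for illustration, and the `translate` function is a simplified stand-in for the paging lookup (a plain list of frames indexed by linear page number), not the actual Trans Matrix mechanism.

```python
PAGE_SIZE = 4096  # 4KB Page Frame, as in the text


def translate(frames, linear):
    """Map a linear address to a physical one.

    frames[n] is the physical address of the frame backing linear page n.
    """
    page, offset = divmod(linear, PAGE_SIZE)
    if linear < 0 or page >= len(frames):
        raise LookupError("linear address not mapped")
    return frames[page] + offset


# Three linear pages in a row, backed by scattered frames (made-up values).
frames = [0x7000, 0x2000, 0x9000]

last_of_page0 = translate(frames, PAGE_SIZE - 1)  # 0x7FFF
first_of_page1 = translate(frames, PAGE_SIZE)     # 0x2000
```

The two linear addresses are adjacent, yet the physical addresses they resolve to are far apart. The program never sees this.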

Allocating Processes

When a process is loaded, memory is allocated to store the code and leave room for the process data. Since I am not providing support for a segmented model, both code and data will share a monolithic block in Linear space.

The upper portion (lowest addresses) contains the code; the data block follows and extends all the way down to the bottom of the designated space.

At load time, the Data block is only the space remaining in the last page occupied by the code. The process code is responsible for requesting memory from the OS as needed. The OS will allocate the requested memory as contiguously as possible, just below the existing block. This way, the space designated to the process can keep growing, limited only by the amount of physical memory available at allocation time.
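The arithmetic behind this can be sketched as follows. The function names and the example sizes are hypothetical; the only facts taken from the text are the 4KB page size and the rule that the initial Data block is whatever is left in the last code page.

```python
PAGE_SIZE = 4096  # 4KB Page Frame, as in the text


def load_layout(code_size):
    """Return (pages, data_start, initial_data) for a freshly loaded process.

    pages        -- page frames needed to hold the code
    data_start   -- first address after the code, relative to the block start
    initial_data -- bytes left over in the last code page
    """
    if code_size < 1:
        raise ValueError("code_size must be positive")
    pages = -(-code_size // PAGE_SIZE)  # ceiling division
    data_start = code_size
    initial_data = pages * PAGE_SIZE - code_size
    return pages, data_start, initial_data


def pages_to_request(initial_data, wanted):
    """Extra pages the process must ask the OS for to hold `wanted` data bytes."""
    shortfall = max(0, wanted - initial_data)
    return -(-shortfall // PAGE_SIZE)


pages, data_start, initial_data = load_layout(10000)
extra = pages_to_request(initial_data, 5000)
```

For 10000 bytes of code this gives 3 pages and 2288 bytes of initial data space. A process that needs 5000 bytes of data would have to request one more page.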

The way processes use their data space is up to the language compiler. The OS and/or the CPU can help with support for different techniques (stack, heap, etc.) but they won't dictate the way programming languages manage their data.

Relative Linear Address (RLA) and Program Counter (PC)

We have established that applications believe they own the entire Linear space. Such a belief is more than an illusion: there is actually no Linear space other than the one given to the application at run-time. We can see the Linear space as being "multi-dimensional": the Trans Matrix contains all its "dimensions", one for each application running at a given time.

It follows that applications supply only Linear addresses and those are not absolute but relative to the beginning of the designated block in memory. In other words, the CPU interprets addresses supplied by the application as offsets within the designated code-data block. We call this a "Relative Linear Address" (RLA).

Application code is linked to produce RLAs. The first RLA (where execution starts) is always zero. The address where the Data block starts (following the Code block) is known at link time, so addresses for instructions such as "MOV A, ADDR" can be resolved by the Linker, and they are also given as RLAs (relative to the beginning of the Code block).

This has implications for the Program Counter (PC).

Fetch bus cycles perform address translation from a given Linear address. But we just said that application code always starts at address zero. Since (at application run-time) there is no Linear space other than the one owned by the application, the RLA becomes the actual address to be fetched. Therefore, the content of the PC can be nothing other than the RLA provided by the application code.
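The following sketch illustrates the idea with two processes. The dictionary used for the Trans Matrix (PROC # mapped to a list of frame addresses), the `fetch_address` name, and the frame values are assumptions made for illustration only.

```python
PAGE_SIZE = 4096  # 4KB Page Frame, as in the text


def fetch_address(trans_matrix, proc, pc):
    """Physical address used by a fetch cycle for the running process.

    trans_matrix[proc] lists the frames backing that process's linear
    pages, so the PC (an RLA) is translated within that process's own
    "dimension" of the Linear space.
    """
    frames = trans_matrix[proc]
    page, offset = divmod(pc, PAGE_SIZE)
    if pc < 0 or page >= len(frames):
        raise LookupError("RLA outside the space designated to the process")
    return frames[page] + offset


# Two processes, each with its own mapping (made-up frame addresses).
trans_matrix = {
    1: [0x4000, 0xA000],
    2: [0x8000],
}

start_1 = fetch_address(trans_matrix, 1, 0)  # 0x4000
start_2 = fetch_address(trans_matrix, 2, 0)  # 0x8000
```

Both processes start with PC = 0, yet each fetches its first instruction from a different physical frame, with no segment base register involved.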

When paging is enabled (that is, when not in Kernel mode) there is no need for a CS register. No need for a DS register either. I can still provide support for stacks (a SP reg and associated PUSH/POP instructions, for example) but that will be a plus, not strictly required by the conceptual model.
