Phase 1: Research

Reading - Day One

05/15/2009

I expected my book to arrive by Monday but it came today (Friday). Its title: "Formal Models of Operating System Kernels" by Iain D. Craig. Not sure if it will be useful at this moment: the book is essentially theoretical and consequently dense.

To start with, it employs a notation called "Object-Z" for describing kernel approaches in a mathematical form so their validity (or feasibility) can be proven "on paper"; the whole book is full of that. I would have to start by learning the "Object-Z" notation from another source, because the author assumes that I already know such a bizarre thing. Nevertheless, I found it to be pretty interesting stuff... but not for now.

So I turned to "The Linux Kernel Online Book" (http://www.tldp.org/LDP/tlk/tlk.html ) and it was good. I read about Memory Management, Virtual Memory and that kind of stuff. As I read, I couldn't help figuring out in the background of my mind how to implement all that in my own computer, but I understand that this is not the time for even thinking about it.

I don't think I will continue to keep track of my readings in here. I only wanted to make this comment because today is day one.

The Microprocessor temptation

05/17/2009

I see that modern "mini-computers" such as the IBM AS/400 are based on Microprocessors, so what's wrong with doing so for my project? Actually, it would be funny, because the "fun" was in escaping from Microprocessors in the first place.

But what do I really want to build? The fastest computer in the World or the most primitive one? A replica of the IBM360 or a bizarre computer that just lights LEDs and makes noise when it is not crashing?

What do I actually mean by "Mini-Computer" and what is the underlying motivation of my project?

Well, I believe that a "Mini-Computer" is a machine that is somewhere between a PC and a "Super-Computer". A machine that is affordable but is not hardware compatible with PC-industry components such as NICs and video cards... just to say.

So where is the fun? It is (I believe) in actually designing the machine. Which components to use is not what matters. The point is to define (or adopt) an architecture, making the pertinent decisions to fulfill the envisioned design goals and specifications. A Microprocessor would take care of much of the work, but not all of it, not the design per se.

The motivation is to learn Computer Science through a hands-on project. And the project is to produce a manageable design and, eventually, to build a functional computer. This is not much different from any College student's project.

In this respect, I don't think Microprocessors would help me much. Today's microprocessors are very complex. Since this is my first Computer Science project, it has to be manageable, so it must be simple.

Nevertheless, this may change...

Wake up!

05/19/2009

So... your first computer will be capable of running a ported multi-user, multi-tasking, open-source compatible operating system... Come on, Armando, you are dreaming, wake up!

Indeed, too ambitious for a first try, so let's land safely on earth and start rethinking the whole thing from the beginning.

What would be a realistic approach for my homebrew mini-computer? One that is manageable but at the same time allows me to explore those techniques that intrigue me the most. I would say that the following are the topics I cannot miss, not even on the first try:

- Virtual Memory and Protected Mode

- Peripherals

- Kernel development

I can reduce each of those to its simplest form.

- Virtual Memory: Single translation table (no "directories"). Few control fields.

- Peripherals: Just one RS232 port so the machine can communicate with my PC using a Terminal Emulator program such as HyperTerminal.

- Kernel development: My opportunity to just get started in that matter. This is possibly the most complicated of all but happens to be the most interesting to me... can't miss it!
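To make the "single translation table, few control fields" idea concrete, here is a minimal sketch in Python. It is not a design decision: the field widths (`OFFSET_BITS`, `PAGE_BITS`), the two control fields (`present`, `writable`) and every name in it are assumptions chosen only for illustration.

```python
# Hypothetical sketch of a single-level translation table (no "directories").
# Field widths and control fields are illustrative assumptions.
from dataclasses import dataclass

OFFSET_BITS = 10   # assumed: 2**10 addressable units per page
PAGE_BITS = 6      # assumed: 64 pages in the linear space


@dataclass
class Entry:
    frame: int = 0          # physical frame number
    present: bool = False   # control field: page is mapped
    writable: bool = False  # control field: writes are allowed


class PageFault(Exception):
    pass


class ProtectionFault(Exception):
    pass


def translate(table, linear, write=False):
    """Translate a linear address into a physical one using one flat table."""
    page = linear >> OFFSET_BITS
    offset = linear & ((1 << OFFSET_BITS) - 1)
    if page >= len(table) or not table[page].present:
        raise PageFault(f"page {page} is not mapped")
    entry = table[page]
    if write and not entry.writable:
        raise ProtectionFault(f"page {page} is read-only")
    return (entry.frame << OFFSET_BITS) | offset


# One table with every page unmapped, then map page 3 to frame 17, read-only.
table = [Entry() for _ in range(1 << PAGE_BITS)]
table[3] = Entry(frame=17, present=True, writable=False)

print(hex(translate(table, (3 << OFFSET_BITS) | 0x2A)))  # 0x442a
```

Each lookup is a single table index, which is what makes this form attractive for a first try. The price is that the table needs one entry for every page of the linear space, whether that page is used or not.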

So here is what my machine starts to look like:

From outside: A bunch of cards inserted into a frame. A traditional mini-computer front panel with lots of switches and LEDs. A serial port to plug into the outside world.

From inside: A small provision of static RAM. A memory management circuit providing the necessary address translation. An interrupt controller that for now deals only with the UART. Some registers, ALU etc.

Needless to say that I am not making a decision yet. I'm just thinking...

Considering FPGA

05/20/2009

Since this will be an experimental computer (a kind of personal laboratory), FPGA seems to be the obvious option for its base technology. Once the machine has been built, I would be able to tweak the circuits at no cost without even opening the case. Besides, it is a good opportunity for me to get started with this technology.

However, FPGAs don't come in DIP packages, much less with 100 mil (0.1 inch) pin spacing, a little detail that takes me away from the traditional cheap prototyping boards that I know. There are prototyping boards for FPGAs, indeed, and they come with cool and convenient features such as USB, Parallel ports, Flash memory etc, but they are consequently expensive.

I had never thought of spending my time making custom PCB cards, but that could be an alternative to the expensive FPGA prototyping boards, though PCB design doesn't sound too attractive to me. In that case I would have to discard SRAM FPGAs at once and focus on flash-based ones only.

So the FPGA pick is not as obvious as it seemed at first, though it is still a very attractive one.

Simplified Memory Management and Protection

05/22/2009

I now have a better understanding of Memory Management and General Protection, though I'm far from being an expert in the matter.

I have been thinking all the time (in the background of my mind) about how to implement all that in my computer, but now it has occurred to me that a better try would be, perhaps, to design a simplified version of memory management and general protection.

This way, I'll be able to play with those concepts in a manageable way and ultimately get to understand through practice why the real world needs the more complex implementations it has.

05/23/2009

I worked out the idea a little bit. My findings are in the Note: "Simplified Memory Management":

index.php?branch=56

Memory Allocation

05/24/2009

These days I've been reading and thinking about memory allocation. As usual, I occasionally turned to Bill Buzbee's Magic-1 computer for inspiration. I see that he has limited each process's memory to 128KB split into two segments: 64KB code and 64KB data; the available linear address space gets distributed this way among different processes.

Actually, I'm seeking a different approach. I have established the premise that each process should believe it owns the entire 4GB linear space. Consequently, the CPU should interpret linear addresses provided by processes as nothing but offsets within the designated physical memory space. My premise also implies that memory given to processes should not be limited.

This has turned into kind of a nightmare for me. I'm kind of lost among several decisions pending to be made: Whether to support variable-length structures from the CPU; whether to manage a Heap: a global heap or one per process... that kind of thing.

Managing variable-length structures is complicated, so it contradicts my premise of keeping things simple at first while leaving room for further development. And yet there are many other things concerning Memory Allocation, such as fragmentation, caching and swapping.

My task for now is to figure out how a real program will run on my machine, and then decide who in the chain (CPU, OS, Compiler, Application code) will be taking care of which part.

05/25/2009

I worked this out and could get to some conclusions. See note "First Approach to Memory Allocation":

index.php?branch=66

Scaling down

05/26/2009

My "first approach to implementation" proved to be unscalable (in a practical sense at least). However, it seems conceptually valid to me. I don't want to discard the "all-for-one" policy nor the "multi-dimensional Trans Matrix" because those ideas still look fine to me.

Nevertheless, I looked for different approaches. I saw for instance that real-world systems place Page Tables in Main Memory (not in separate circuits as Magic-1 does) and that the OS takes care of memory allocation and that kind of complicated thing.

My focus during this research phase is on hardware design: I need to produce the specification for the hardware. Software development is far, far away in the future; however, I have to leave room in the specification for that future. That is my challenge.

Most of the time, then, the decision making is about who is going to take care of what (and more or less, how) so I can specify the right CPU support for the system software to come.

Back to my "First Approach to Implementation", what I decided today was to scale the architecture down to manageable figures. I don't plan to make a desktop machine; this will be a mainframe-style computer... no video card, no mouse... ok?

My findings are in this note:

index.php?branch=75

System Calls

05/27/2009

Today I asked myself the obvious question of how system calls are actually made.

As usual, I brainstormed on my own for a few minutes before going to the books. I imagined each process's code containing a copy of some kernel interface: a set of functions whose relative addresses were well known to the Linker so they could be called from user code. These little functions responded by switching the CPU into Kernel mode before serving the request.

The technical literature later told me a different tale. In Linux (on x86), user processes make system calls by raising interrupt 0x80, which is handled by the Kernel. Application programmers, however, use wrapper C functions so they don't deal with the interrupts directly.

That tale is, of course, the short answer to my question. I spent longer reading about this and other related topics. And it was while reading about "real things" that my crazy idea of a "hardware-isolated kernel" arose once again, making better sense to me now than before. However, I don't want to work out this bizarre idea for now.

System calls took me to interrupts, which in turn took me to User/Kernel mode switching. Thinking about this, I came up with the surprising idea that, in my machine (and thanks to the Multi-Dimensional Matrix idea), I can treat Kernel code with less reverence than usual... as pointed out in the following note:
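The chain "wrapper function, software interrupt, kernel handler" can be pictured with a toy simulation in Python. This is only a conceptual sketch, not how Linux is written: `ToyCPU`, the call number `SYS_WRITE = 1` and the `console` list are all hypothetical names made up for the example; only the vector number 0x80 comes from the text above.

```python
# Conceptual simulation only: real kernels do this in hardware and assembly.
# All names and the call number below are hypothetical.
USER, KERNEL = "user", "kernel"


class ToyCPU:
    def __init__(self):
        self.mode = USER
        self.vectors = {}  # interrupt number -> handler function

    def software_interrupt(self, number, *args):
        handler = self.vectors[number]
        previous, self.mode = self.mode, KERNEL  # trap: enter kernel mode
        try:
            return handler(self, *args)
        finally:
            self.mode = previous  # return from interrupt: restore old mode


SYSCALL_VECTOR = 0x80
SYS_WRITE = 1  # hypothetical call number

console = []  # stands in for an output device


def sys_write(cpu, text):
    assert cpu.mode == KERNEL  # only reachable through the trap
    console.append(text)
    return len(text)


SYSCALL_TABLE = {SYS_WRITE: sys_write}


def syscall_handler(cpu, call_number, *args):
    """Kernel side: pick the service routine from the call number."""
    return SYSCALL_TABLE[call_number](cpu, *args)


def write(cpu, text):
    """User side: the wrapper application programmers would call."""
    return cpu.software_interrupt(SYSCALL_VECTOR, SYS_WRITE, text)


cpu = ToyCPU()
cpu.vectors[SYSCALL_VECTOR] = syscall_handler
written = write(cpu, "hello")
print(written, cpu.mode, console)  # 5 user ['hello']
```

The point of the sketch is that user code never jumps into kernel code directly: the only entry is the interrupt vector, and the mode switch happens as part of taking the interrupt.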

index.php?branch=87

The Matrix takes me to strange roads

05/28/2009

The "Multi-Dimensional Matrix" has taken me to a series of concepts that I truly like. Those are:

- Kernel as a privileged process (not the ubiquitous God)

- Paging as the only way to address physical memory

- Super-Linear space

- Kernel addressing the Matrix in Super-Linear space.

These ideas are indeed "suspicious" since they are not present in real-life systems (as far as I know). I want to explore them in depth, however, because as of today they make perfect sense to me, so why discard them?

Nevertheless, most likely I will design the CPU so Paging can be disabled. After all, I won't be able to experiment with software until the hardware is there, so it's better to have all possible choices available.

The first Sketch for the CPU architecture

05/29/2009

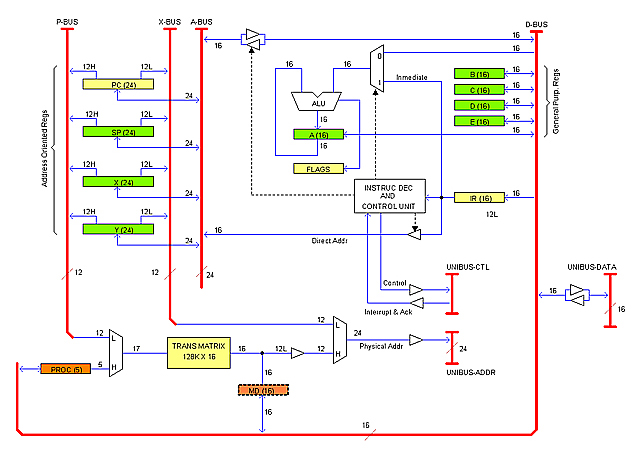

Today I could draw the first sketch of the CPU architecture or block diagram. It was surprisingly difficult; I expected to have a better idea on how to put together all of the ideas developed so far. But it was done any ways and it seems promising to me. By the way, I also came with a name for my computer: MDM-24 (stands for "Multi-Dimensional-Matrix" and 24 refers to the size of the Addr Bus). I like it because is similar to "PDP-11".

This is not actually the time for coming with a design, not even a block diagram. What is scheduled to do now is a specification. However, drawing the architecture for the first time got me a closer approach to implementation details that I feel useful to know in advance before writing the spec.

Anyways, what I drawn today is only a sketch that surely will change dramatically in the near future.

Here it is:

Resizing the Matrix

05/31/2009

Today I did two things: I read about mainframes and I played with different sizes for my Trans Matrix using a little utility that I improvised yesterday.

My readings were like a tale of castles and princes. Now I realize why I don't like PCs: the real action is in mainframe engineering... but anyway, this is not about my professional frustrations.

As for the Matrix, it is almost decided that my computer will address words (16 bits), not bytes. Here are the results for different Matrix configurations:

-------------- CONFIGURATION # 1 -------------------------------------------

Max Memory : 32 MB

Page Frame size : 1 Kw (2KB)

Max simultaneous processes : 16

LINEAR ADDRESS: Width: 24 => Page field : 14 Offset field : 10

MATRIX ENTRY : Width: 20 => Control bits: 6 Physical Addr fld: 14

Matrix Size: 256K X 20 = 640 KB

-------------- CONFIGURATION # 2 -------------------------------------------

Max Memory : 8 MB

Page Frame size : 1 Kw (2KB)

Max simultaneous processes : 16

LINEAR ADDRESS: Width: 22 => Page field : 12 Offset field : 10

MATRIX ENTRY : Width: 16 => Control bits: 4 Physical Addr fld: 12

Matrix Size: 64K x 16 = 128 KB

-------------- CONFIGURATION # 3 -------------------------------------------

Max Memory : 4 MB

Page Frame size : 1 Kw (2KB)

Max simultaneous processes : 16

LINEAR ADDRESS: Width: 21 => Page field : 11 Offset field : 10

MATRIX ENTRY : Width: 16 => Control bits: 5 Physical Addr fld: 11

Matrix Size: 32K X 16 = 64 KB

-------------- CONFIGURATION # 4 -------------------------------------------

Max Memory : 2 MB

Page Frame size : 1 Kw (2KB)

Max simultaneous processes : 16

LINEAR ADDRESS: Width: 20 => Page field : 10 Offset field : 10

MATRIX ENTRY : Width: 16 => Control bits: 6 Physical Addr fld: 10

Matrix Size: 16K X 16 = 32 KB

----------------------------------------------------------------------------

This last seems close enough to reality.
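The arithmetic behind those four configurations can be reproduced with a few lines of Python. This is a reconstruction from the figures listed, not the utility mentioned above: the function name `matrix_config` is made up, and it assumes what the numbers suggest, namely that the Matrix holds one entry per page per process and that the physical address field is as wide as the page field.

```python
# Hypothetical reconstruction of the sizing arithmetic; not the original utility.
# Assumes one Matrix entry per (process, page) pair and a physical address
# field as wide as the page field, as in all four configurations above.

def matrix_config(addr_bits, entry_bits, offset_bits=10, processes=16, word_bytes=2):
    page_bits = addr_bits - offset_bits
    entries = processes * (1 << page_bits)
    return {
        "max_memory_mb": (1 << addr_bits) * word_bytes // 2**20,
        "page_frame_words": 1 << offset_bits,
        "page_field_bits": page_bits,
        "control_bits": entry_bits - page_bits,
        "matrix_entries_k": entries // 1024,
        "matrix_size_kb": entries * entry_bits // 8 // 1024,
    }


# (linear address width, Matrix entry width) for configurations 1 to 4
for addr_bits, entry_bits in [(24, 20), (22, 16), (21, 16), (20, 16)]:
    print(addr_bits, entry_bits, matrix_config(addr_bits, entry_bits))
```

For example, configuration # 4 gives 16 x 2^10 = 16K entries of 16 bits each, which is 32 KB, against 2 MB of addressable memory (2^20 words of 2 bytes).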

Writing the Spec

06/01/2009

The Research Phase is over. I am now writing the Spec for the hardware design. I am surprised that research has taken such a short period of time. I think it was for two reasons: (1) I have devoted a generous amount of time to it. (2) I left OS development matters for later.

In fact, my goal in this phase is to get specs for the hardware design (next phase). I could finally set the frontier between hardware support and the OS's job... that helped. The hardware I'm picturing provides support for Multi-tasking and General Protection, and that's exactly what I need for now, no more than that.

I also got the official name for my homebrew mini-computer: Heritage-1 (or "/1"; not sure if dash or forward slash). Heritage because... well, you know.

Research is over. Did I say that? Not quite... research never ends. I've done only the easy part. The real fun is yet to come.
